There’s a point most teams hit once synthetic data becomes part of their workflow.

Generation speeds up. Coverage improves. Models start benefiting from scenarios that would be difficult or impossible to capture in the real world. Things are moving in the right direction.

Then, almost unnoticed, synthetic data storage becomes the bottleneck that brings all that momentum to a halt.

Not because anything breaks. But because synthetic data accumulates differently. Datasets grow faster. Variations pile up. Old versions stick around “just in case.” Over time, managing the data becomes harder than generating it.

As more AI training pipelines shift toward synthetic data, storage stops being just infrastructure. It becomes part of how well the system works.

Why Synthetic Data Puts Pressure on Storage

Synthetic data tends to stress systems in ways traditional datasets don’t.

The first reason is volume. Once teams are no longer constrained by real-world collection, it’s easy to generate far more data than originally planned. A few terabytes turns into dozens as scenario coverage expands.

The second is change. Synthetic datasets aren’t static. Parameters get tweaked. New edge cases are added. Assumptions evolve. Each iteration produces a new dataset version, and most teams hesitate to delete anything that might be useful later.

The third is structure. Synthetic data usually comes with detailed metadata like camera configurations, sensor models, object states, environmental conditions, physics parameters. That information is critical, but it’s often managed separately from the data itself, which makes it harder to track over time.

Finally, there’s performance. Training jobs read the same data repeatedly and expect storage to keep up. When it can’t, GPUs wait, training slows down, and costs rise.

None of this happens all at once. Storage expands silently. Pipelines slow. The past becomes harder to revisit. And when storage finally demands attention, it does so with a price tag attached.

Rethinking What Actually Needs to Be Stored

One of the most useful questions teams can ask is also one of the simplest:

Do we really need to store all of this data?

In many physics-based synthetic data pipelines, the dataset itself isn’t the only, or even the primary, source of truth. The parameters that generated it matter just as much.

- Camera placement.

- Lighting values.

- Materials.

- Physics settings.

- Scene definitions.

When generation is deterministic, those parameters define the dataset. Store them, and the data can be recreated exactly.

That changes the equation. Instead of archiving large volumes of data indefinitely, teams can store lightweight metadata and regenerate datasets when they’re actually needed. Compute replaces storage as the primary cost.

For a surprising number of workloads, that trade-off works in their favor.

Leveraging Regeneration to Minimize Storage

Regenerating synthetic data can be a powerful lever for reducing synthetic data storage overhead and simplifying long-term data management when the generation method supports it.

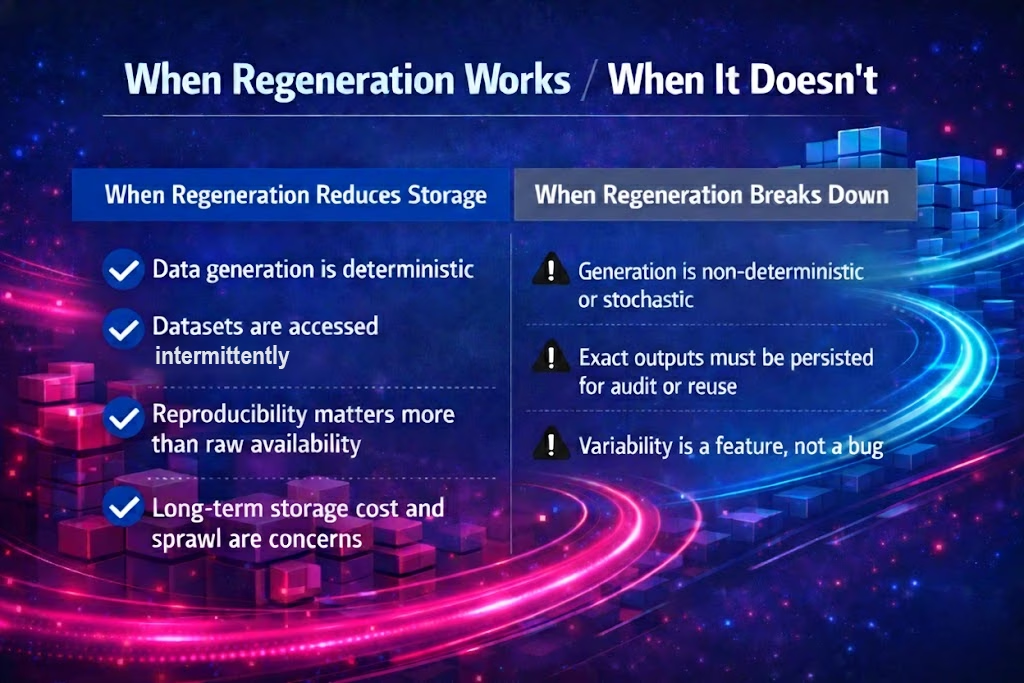

Regeneration is viable when data generation is deterministic, datasets are accessed intermittently rather than continuously, reproducibility is required, and storage growth is a concern. In these cases, persisting generation parameters instead of rendered outputs dramatically reduces storage footprint. Experiments remain reproducible without maintaining large volumes of archived data.

This approach does not apply to non-deterministic generation. Prompt-driven generative models are inherently stochastic, producing different outputs for the same inputs. As a result, outputs must be stored if they need to be referenced, validated, or reused.

That distinction, deterministic versus non-deterministic generation, has downstream implications for storage architecture, versioning, auditability, and cost.

While both generative AI and physics-based simulation produce synthetic data, they behave differently once these constraints are considered. Generative models prioritize variability but require post-generation storage and quality management. Physics-based simulation produces repeatable outputs with precise ground truth, enabling regeneration, traceability, and simpler long-term data management.

Ultimately, the generation method determines not just how data is created, but how it must be stored, reproduced, and governed over time.

What Scalable Synthetic Data Storage Looks Like

Teams that handle synthetic data well rarely rely on a single storage system. Instead, they separate concerns and let data move through layers over time.

Active training data lives close to the GPUs on fast local storage. The goal here is simple: keep accelerators fed without delay.

Recently used datasets often move to a shared, high-performance layer where multiple jobs can access them without duplication.

Older data lands in object storage, where cost matters more than latency and access is less frequent.

And in physics-based workflows, there’s often another layer entirely: metadata-only storage. Parameter files, configuration manifests, and version history tracked much like source code.

The exact technologies vary, but the pattern is consistent. Data that’s no longer active shouldn’t live forever in the most expensive tier.

Versioning Is Where Things Usually Get Messy

Synthetic data changes too quickly for informal versioning to hold up.

Teams often discover this when they try to answer basic questions: Which dataset trained this model? What changed between versions? Can we reproduce a result from last quarter?

Copying entire datasets for each change works early on, but it scales poorly. Storage grows rapidly, and relationships between datasets become harder to understand.

Deterministic pipelines make this simpler. When parameters define the dataset, versioning becomes manageable. Changes are explicit. History is clear. Reproducing older results is straightforward.

For data that must be stored, copy-on-write and deduplication help reduce overhead, but they don’t eliminate the underlying cost of retaining every generated sample.

Storage Performance Still Shapes Training Speed

Even with good lifecycle management, performance matters.

Large training runs can involve reading massive amounts of data over time. If storage can’t deliver consistent throughput, training stretches out and GPUs spend more time waiting than computing.

Teams typically address this by:

- Bundling data into larger files instead of millions of small ones

- Using parallel data loaders

- Caching aggressively on local SSDs

- Keeping storage physically close to compute

In some physics-based pipelines, teams skip disk altogether and generate data on demand during training. When generation is fast enough, this removes an entire class of bottlenecks.

Cost Control Starts With Early Design Choices

Synthetic data storage costs rarely spiral because of a single bad decision. They grow because systems are designed with the assumption that everything needs to be stored indefinitely.

Synthetic data challenges that assumption.

Some datasets are cheaper to regenerate than to keep. Others lose value once training is complete. Without clear lifecycle rules, data accumulates quietly and expensively.

The biggest savings usually come from architectural decisions made early, not from tuning costs later.

Closing Thoughts

Synthetic data is becoming a foundational part of modern AI systems. As that happens, storage decisions start to influence far more than cost. They affect training speed, reproducibility, and how confidently teams can build on past work.

The teams that scale successfully tend to share a mindset: they treat data as something that can be recreated, not just accumulated.

When synthetic data storage is designed with that perspective, it stops being a quiet constraint and starts supporting the pace of development synthetic data makes possible.

Want to Go Deeper?

- Explore whether regeneration fits your storage model

- See how deterministic simulation changes storage requirements

- Talk through a storage-aware synthetic data strategy